Portfolio Website

About

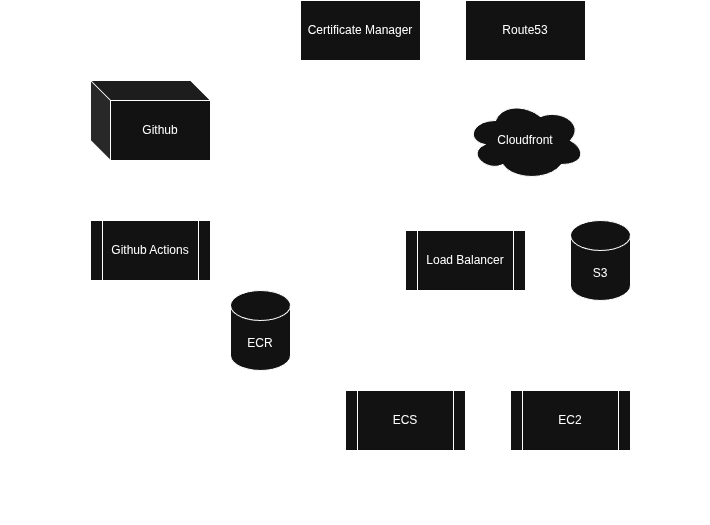

This website is a project in its own right. Parts of its infrastructure are more robust than the site currently requires. That was intentional: it lets me build other projects on top of the same infrastructure, and it showcases some of my knowledge of designing and building such systems.

Structure

Core

The core of the website is built with Jekyll and the Minimal Mistakes theme (both Ruby-based). I chose them because front-end development is the one area I have not spent much time on, and I honestly struggle to find the motivation to deepen my understanding of it. These two tools basically let me write pages in Markdown, use macros for styling, and customize the CSS for fancier styling where I think it is worth it.

All code for the website is hosted on GitHub and deployed automatically via GitHub Actions. The site is packaged into a Docker image and deployed as a container, which gives consistent results when developing on different platforms and makes environment management easy.

Cloud Infrastructure (The Meat)

All cloud infrastructure is hosted on AWS. I have also used Azure and Google Cloud, but I find that AWS provides the most robust solutions for cloud infrastructure.

Two domains point to the same website: reid.wiki and reidrussell.io (reid.wiki is considered the core domain). Both are provisioned and managed in Amazon Route 53. AWS Certificate Manager provisions the SSL/TLS certificates that enable HTTPS connections. CloudFront operates as the CDN and WAF layer and provides some protection, mainly against obvious attacks such as DDoS. S3 hosts content such as images, videos, and GIFs, which CloudFront caches and distributes. Requests that are not for assets are routed to an Application Load Balancer, which at the moment operates more as a dispatcher than as an actual load balancer. Some of the services I run are very much in beta or purposefully private; the load balancer routes those connections to the relevant server.
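The request flow described above can be sketched as a small routing function. This is only an illustration of the decision order, not the actual CloudFront or load balancer configuration: the asset path prefixes, the `beta.reid.wiki` hostname, and the target names are all hypothetical placeholders.

```python
from dataclasses import dataclass

# Hypothetical values for illustration only; the real path prefixes,
# private hostnames, and target names are not part of this write-up.
ASSET_PREFIXES = ("/assets/", "/images/", "/videos/")
PRIVATE_HOSTS = {"beta.reid.wiki": "beta-service"}
SITE_DOMAINS = {"reid.wiki", "reidrussell.io"}


@dataclass(frozen=True)
class Request:
    host: str
    path: str


def route(request: Request) -> str:
    """Return the name of the component that would serve the request."""
    # Static assets are served from S3 through the CloudFront cache.
    if request.path.startswith(ASSET_PREFIXES):
        return "cloudfront->s3"
    # Beta or private services are dispatched to their own server.
    if request.host in PRIVATE_HOSTS:
        return f"alb->{PRIVATE_HOSTS[request.host]}"
    # Both public domains land on the same website containers.
    if request.host in SITE_DOMAINS:
        return "alb->website"
    # Anything else is not served.
    return "rejected"
```

In this sketch, a request for `/images/a.png` on either domain resolves to the S3-backed path, while a page request such as `/about/` goes through the load balancer to the website containers.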

As mentioned above, the website is packaged and run in a container. ECR stores the container images, and ECS provides the actual runtime environment.

Running the website in ECS behind a load balancer lets me set dynamic scaling rules that spin up additional containers when there is a lot of traffic (although for a static site like this it is probably not necessary). Containerizing the website also lets me develop and test locally on different machines while getting consistent results when deployed.
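To give a feel for what a dynamic scaling rule does, here is a minimal sketch of a proportional rule: scale the number of containers by the ratio of an observed metric to its target, then clamp to a range. It is a simplification for illustration, not how AWS computes scaling internally, and the function name, the CPU metric, and the default limits are assumptions rather than this site's settings.

```python
import math


def desired_task_count(
    current_tasks: int,
    metric_value: float,
    target_value: float,
    min_tasks: int = 1,   # assumed lower bound
    max_tasks: int = 4,   # assumed upper bound
) -> int:
    """Simplified proportional scaling rule (not AWS's internal algorithm)."""
    if current_tasks < 1:
        raise ValueError("current_tasks must be at least 1")
    if target_value <= 0:
        raise ValueError("target_value must be positive")
    # Scale the task count by how far the metric is from its target,
    # rounding up so the service is never left under-provisioned.
    raw = math.ceil(current_tasks * metric_value / target_value)
    # Keep the result within the configured bounds.
    return max(min_tasks, min(max_tasks, raw))
```

For example, one container averaging 90% CPU against a 60% target would scale out to two containers, while two containers averaging 15% CPU would scale back in to one.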

The simplest and most cost-effective way to run this would have been to put everything in an S3 bucket and serve it as a static site. However, I purposefully chose a more robust architecture to showcase my ability to build one and to allow expansion into other services.