The Drone Project

Background

I consider this my core project. It has been in my mind for years, but I didn’t really start working on it until around 2019. There are many directions I want to take it, but many require skills that I either do not possess or do not have enough experience in to jump straight in. Because of this, I have tried to select specific applications to develop in a deliberate sequence, so that the lessons learned and skills gained from one lead into the next. Many of my other projects are small stepping stones toward goals that I expect to converge with this project in a future application.

History

As a kid, I loved playing with RC planes and helicopters, but I always wanted to make them do something useful. During college, I joined the robotics club, where we developed a very large drone and I had the opportunity to contribute to the flight planning software. In my junior and senior years, I didn’t participate in the club, but still felt the need for an outlet for my interest. As I thought about what I wanted to accomplish by building a drone, I began to realize that there were many unknowns I would have to figure out before I could tackle some of my ideas. So I decided to start small and build from there.

First Drone

For my first drone, I decided to build a small 5” FPV quad. The main goal of this initial project was to get my feet wet, learn the hardware that goes into a drone, understand what the different components do, and get some low-risk experience in the common pitfalls with building drones. It was definitely super fun to fly around once complete, but I very quickly got the same feeling of “I want to do something useful with this”. I began to seriously consider the roadmap and started designing the next iteration.

Motivations

As I mentioned before, my interest in remote-controlled craft has been present since childhood. It has certainly evolved over the years, and in the past 8 or so years it has converged on several specific areas of interest. The goals can be broken down into 2 categories:

- Commercial

- Defense

On the commercial side, there are two areas that (from my perspective) most companies are working on in the space: drone delivery and agriculture (mainly spraying). I would say most of the applications I want to explore on the commercial side do not fall into either of these categories, but I do eventually want to explore a cargo application and data gathering in agriculture.

For defense, it’s obvious that low-cost, small drones are becoming an effective piece of the battlefield. There are several companies working on offensive and defensive applications in this area, but I feel that there are still many gaps in current capabilities (especially on the counter-UAS side). This is something I think about a lot, and I have a lot of ideas on it.

Current Application

Abstract

The focus of this iteration is wildlife management. The primary goal of the project is to develop a system that autonomously searches a given area and, using a thermal camera, finds wild pigs (hogs). The idea is to detect animals and then run each detection through a classification model (running at the edge, on the drone itself) to determine what the animal is. If it’s a hog, the system sends me a notification.
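A minimal sketch of how the "search a given area" step could be turned into waypoints, assuming a simple rectangular area and a back-and-forth (lawnmower) pattern. The function names, the flat local x/y frame in metres, and the straight-down camera model are all assumptions made for illustration; nothing here is the project's actual planner.

```python
import math


def swath_width(altitude_m, hfov_deg):
    """Ground width seen by a straight-down camera at a given altitude.

    Assumes flat ground and a camera pointing directly down.
    """
    return 2.0 * altitude_m * math.tan(math.radians(hfov_deg) / 2.0)


def lawnmower_waypoints(width_m, height_m, swath_m):
    """Return (x, y) waypoints in metres covering a width x height rectangle.

    The origin is one corner of the area. Passes run along the y axis and
    are spaced one swath apart, so adjacent camera footprints just touch.
    """
    if width_m <= 0 or height_m <= 0 or swath_m <= 0:
        raise ValueError("dimensions and swath must be positive")
    passes = math.ceil(width_m / swath_m)
    waypoints = []
    for i in range(passes):
        # Fly down the centre of each strip, clamped to stay inside the area.
        x = min((i + 0.5) * swath_m, width_m)
        # Alternate direction on each pass so no leg is flown twice.
        if i % 2 == 0:
            start_y, end_y = 0.0, height_m
        else:
            start_y, end_y = height_m, 0.0
        waypoints.append((x, start_y))
        waypoints.append((x, end_y))
    return waypoints


if __name__ == "__main__":
    # Hypothetical numbers: 50 m altitude, 50 degree horizontal field of view.
    swath = swath_width(50.0, 50.0)
    for wp in lawnmower_waypoints(300.0, 500.0, swath):
        print(wp)
```

In practice the local x/y points would still need converting to GPS coordinates, and some overlap between passes (a swath slightly narrower than the camera footprint) is probably worth having so animals on a strip edge are not missed.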

A secondary goal is to have the system autonomously track herds (animal agnostic) and, over many flights, build a visualization (a heat map, for example) that shows where certain animals typically come from, pass through, and go to on your property. This could mean tracking deer movements or even gathering data on cow herds.
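One way the multi-flight data could be aggregated is a simple grid count. The sketch below assumes each flight produces a list of detection positions as local (x, y) coordinates in metres, and the 50 m cell size is an arbitrary placeholder; both are assumptions for illustration rather than a decided design.

```python
from collections import Counter


def cell_for(x_m, y_m, cell_size_m):
    """Map a position in metres to an integer grid cell index."""
    return (int(x_m // cell_size_m), int(y_m // cell_size_m))


def accumulate(flights, cell_size_m=50.0):
    """Count, per grid cell, how many flights saw at least one animal there.

    `flights` is a list of flights; each flight is a list of (x, y)
    detection positions. A cell is counted at most once per flight so a
    single herd that stays in view for a long time does not dominate.
    """
    counts = Counter()
    for detections in flights:
        seen = {cell_for(x, y, cell_size_m) for x, y in detections}
        counts.update(seen)
    return counts


if __name__ == "__main__":
    # Hypothetical detections from two flights.
    flights = [
        [(10.0, 10.0), (20.0, 30.0), (120.0, 10.0)],
        [(40.0, 40.0)],
    ]
    for cell, n in accumulate(flights).most_common():
        print(cell, n)
```

Cells with the highest counts are the places animals show up most consistently. Keeping a separate counter per species, or per time of night, would be a natural next step toward showing where they come from and go to.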

An additional goal, separate from the main goal, is to develop a precision landing system using a downward-facing camera with ranging capabilities and an IR sensor. The main reason for this goal is to increase reliability, especially in cases where the drone doesn’t know what to do, is low on battery, or has some other emergency. If I put a bunch of expensive components on a drone, I want to be confident that additional safety measures are in place as guardrails.

Skills and Experience Being Targeted

- Programmatic Control And Path Planning

- Interfacing Between Companion Computer And Flight Controller

- Long Range Techniques

- Long Range Video Streams

- Onboard Video Processing / Edge Image Classification Techniques

- Working With Thermal Video

- Precision Landing Techniques, Algorithms, Hardware Requirements, Implementation

- Many Electrical Items (Powering Peripherals, Power Management, etc)

- ROS

- Gazebo / Simulators / Simulated Data

Sub Projects

Ground Station

The first few test flights annoyed me enough to start a whole sub-project. I use QGroundControl for the ground station software, and for some reason it doesn’t run very well on many computers. If an operating system is too up-to-date, I’ve found it typically won’t work. It runs best on Windows machines, but I ultimately need to run it on a Linux machine because WFB-NG (what I’m using for video TX) only runs on Linux, and Linux will also be the better fit if I need to run a ROS node on the ground station (GS).

Once I find a computer with a suitably old or out-of-date operating system, I also have to juggle a large battery (to power the radio), the radio, my joystick controller (currently an old PS4 controller), and a bunch of cables. The whole process is currently very disorganized and hard to set up.

Because of these grievances, I have decided to build a ground station. The idea is to build a rugged, self-contained, all-in-one system that I can easily carry anywhere and deploy very quickly. Ultimately these requirements led me down the path of designing custom inserts that will fit into a Pelican case. The Pelican case will have a large battery and power management system, and the computer will be a mini PC running Ubuntu. There will be a screen mounted on the inside of the lid, and the radio and various switches will be mounted to the inserts. I also want some storage space to carry the flight battery.

Currently, I have ~90% of the main base plate modelled out in CAD. I got hung up on mounting a joystick, because anything too tall won’t allow the lid to close (and most easily acquired joysticks are too tall), so I started to develop a folding mechanism that lets the user stow it. This has kind of stalled out, and I might scrap the mounted joystick idea for the 1st iteration in favor of more storage space and just using the old PS4 controller.

I have yet to figure out the power management system, which will need to be built or bought. Ideally I would be able to just plug the case in, without removing the battery and hooking it up to a fancy LiPo charger, but I don’t have a ton of domain knowledge in that area of electronics. The hope is to have this 100% done by the next test flight (end of Development Phase II), but this might end up being a longer one.

Image Classification

Image classification is one of the final pieces of the project. Because of this, not much work besides research has been done on it yet. I have acquired a FLIR Boson, and have even set up basic object detection, but have yet to design the software architecture for this. Ultimately I will need to build or get a thermal image dataset to train a classification model on. I do not have high hopes of finding an existing thermal dataset of pigs and deer from the air, but maybe I’ll get lucky. Most likely, I will need to manually fly the drone around at night and record images of deer and hogs to train with.

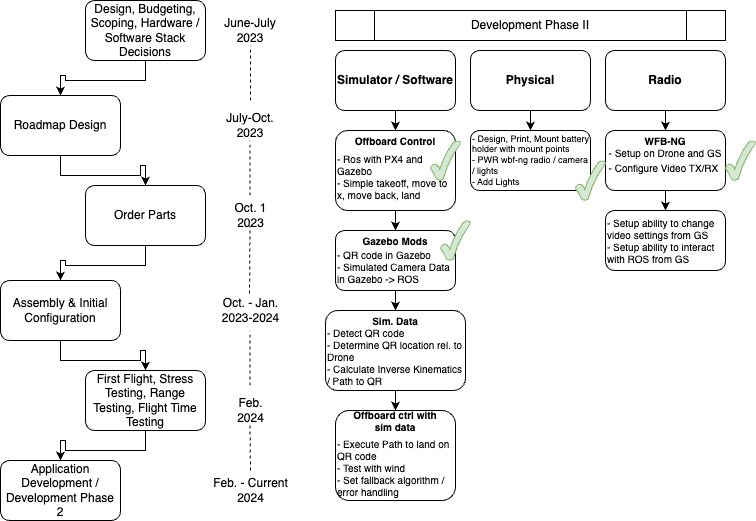

Roadmap

The following is a high level history of this iteration and the current major milestones I am working towards. This doesn’t cover all of the steps and milestones it took to get to this point, but gives a view into the immediate goals.

Current State

The initial phases of the project were focussed on the drone itself. I wanted to build a platform from which I can build future iterations aimed at different applications. For that reason I purposefully designed it to be ‘more’ than I needed for this use.

Currently, it flies. I have tested the range out to about 2 miles with no degradation in signal connection; theoretically it should be able to go up to 60 km line-of-sight. I have tested the flight time out to 45 minutes, but battery voltage began to drop off sharply at that point, so I don’t trust the estimated battery %. I can give it GPS coordinates and have it autonomously fly a flight path at various speeds. It does well in wind, though I have only tested it in conditions with gusts < 25 kts. The auto-landing feature works, but it’s not great. The precision landing system should make this rock solid.

The precision landing camera, companion computer, LEDs, and video TX antennas are all mounted but have yet to be flight tested. The landing camera, video TX antenna, and LEDs have to be powered straight from the power distribution board (PDB) because the companion computer doesn’t put out enough power. I’m currently running into a ground loop issue when the video TX antenna and landing camera are powered via the PDB and connected to the companion computer. Basic LED control and sequencing is complete, but I have yet to hook up control so that it can be manipulated from the ground station.

On the software side, I have a copy of my drone running in a simulator and have basic offboard control working. I am hung up on getting simulated sensor data out of Gazebo and into ROS so that I can work on the precision landing algorithm. This is my main bottleneck in development right now.

I was hoping to be done with Development Phase II by June 2024 and to spend the summer flight testing, but unfortunately I was not able to work on the project from mid-March to June. The timeline has since shifted, and I now hope to be completely done by the end of 2025.

Development Phase II Goals

- Basic offboard control tested in flight

- Precision landing algorithm testing on drone - detect and land on QR code landing pad

- LEDs for night flights, with different sequences to indicate different flight modes or conditions

- Full simulator testing ability

- Video streaming to GS / Range testing video

- WFB-NG operating as control / data link as backup for radios

- Initial offboard control guardrails and fault tolerance testing
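The guardrail item above could start from a small decision function like the one below. This is only a sketch: the `Telemetry` fields, the per-cell voltage thresholds, and the setpoint timeout are hypothetical placeholders rather than values from this build, and a real version would read these from the flight controller instead of taking them as arguments. It uses per-cell voltage rather than an estimated battery percentage, since voltage is the more direct measurement.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    CONTINUE = "continue"
    RETURN_HOME = "return_home"
    LAND_NOW = "land_now"


@dataclass
class Telemetry:
    pack_voltage: float            # total battery voltage in volts
    cell_count: int                # number of cells in series
    link_ok: bool                  # is the ground station link alive?
    seconds_since_setpoint: float  # age of the last offboard setpoint


def choose_action(t, low_cell_v=3.6, critical_cell_v=3.4,
                  setpoint_timeout_s=0.5):
    """Pick the most conservative action the current telemetry calls for.

    All thresholds are illustrative defaults, not tuned values.
    """
    cell_v = t.pack_voltage / t.cell_count
    # A critically low battery overrides everything else.
    if cell_v <= critical_cell_v:
        return Action.LAND_NOW
    # A low battery, a lost link, or a stalled offboard program all mean
    # the mission should stop and the drone should head home.
    if (cell_v <= low_cell_v
            or not t.link_ok
            or t.seconds_since_setpoint > setpoint_timeout_s):
        return Action.RETURN_HOME
    return Action.CONTINUE


if __name__ == "__main__":
    # Hypothetical 6-cell pack with a healthy link.
    print(choose_action(Telemetry(24.0, 6, True, 0.1)))
```

Keeping the decision in one pure function makes it easy to exercise in the simulator with faked telemetry before trusting it in the air.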

Hardware Stack

- HolyBro X500 Frame - This is a 10” frame, but it offers a lot of area and mounting points for peripherals. There are several things I don’t like about this frame, mainly the inaccessibility of the space under the top plate. The arms are sandwiched between the top and bottom plates, so taking off the top plate means taking off all of the arms. This makes mounting things between the two plates a pain, so I will not be using this frame for my next build.

- 12” Props - While the frame is meant for 10” props, it fits 12”. I specifically added larger props and larger motors so that I can carry heavier payloads and a larger battery.

- Pixhawk 6X Flight Controller - A solid flight controller capable of running either PX4 or ArduPilot.

- GPS

- Battery

- CUAV P8 Radios - Very powerful radios with a stock range of up to 60 km. With a modified ground station antenna you can supposedly get up to 100 km range.

- Custom Battery Holder - I oversized the battery to the point that straps didn’t really work. Instead, I designed and 3D printed a container for the battery that easily mounts to the bottom plate of the frame and has mounting points all over it for payload.

- Alpha Wifi Antenna - I am using this for long range video transmission. It requires a lot of power, so I had to get creative on power management.

- Raspberry Pi - Currently, I have a Raspberry Pi 4 mounted to the side of the drone (a Pi 4 because ROS 2 Humble targets Ubuntu 22.04, and the RPi 5 needs a newer Ubuntu release). It controls the LED strips, runs WFB-NG for video transmission, and runs ROS for ‘offboard control’ (programmatic control) / flight controller interfacing.

- NVIDIA Jetson Orin Nano - A very powerful edge compute device tailored for machine learning. This will allow me to do image classification on the thermal images.

- Intel RealSense D435i Depth Camera - This is a downward-facing camera (on a custom 3D printed mount) with an RGB camera, infrared camera, and point-cloud depth data as well as accelerometer data. The primary purpose of this camera is to support the project’s precision landing goal. Several academic papers have been written on the subject using similar setups, either a camera alone or a camera and infrared sensor paired with an infrared emitter on the landing pad. I think incorporating the ranging data will be beneficial, since it should give a more accurate altitude estimate close to the ground.

- FLIR Boson Thermal Camera - This is not on the drone yet, and I was not planning on getting a thermal camera until Development Phase II was complete, but I found a good deal on a second-hand FLIR Boson and jumped on it. I have tested it, and it works great. Originally I thought I was going to have to spend several thousand on a high quality thermal camera, but from initial tests I think this will be good enough quality for my application. I still need to figure out a gimbal.

Software Stack

Flight Controller

The flight controller is running the open source PX4 autopilot software. It is great for getting up and running quickly, with basic flight capabilities ready to go out of the box. It also has good documentation and provides several software interfaces that can be used for control or gathering data. The ROS integration is great, and provides low-level control as well as high-level commands.

ROS

I’m using the Humble Hawksbill ROS distro for the control architecture. The main reasons for this are:

- I have some prior experience with ROS

- I wanted to learn more about / get better at ROS

- PX4 has a nice built in ROS interface

- ROS integrates with Gazebo for testing in a simulator, and PX4 has prebuilt scenes and plugins for Gazebo

- It provides pretty much everything I need

ROS does have its downsides, mainly the large amount of setup and boilerplate environment work that goes into it, but once you get set up it’s pretty nice to work with. It also has a good community and lots of documentation.

Gazebo

For the time being, Gazebo is my simulator of choice. I will say, I don’t find it very intuitive (maybe because I haven’t worked with sims before). That said, it integrates with ROS, which is nice, and PX4 has pre-built drone models (including the X500 frame) you can build off of, so a lot of the boilerplate work is already done, making it a tough sell to switch.

This is currently where I’m a little hung up on the software side. I have successfully tested several simple offboard control (ROS controlled) programs that make the drone take off, fly around, then land at its takeoff point, but I’ve gotten to the point where I need to write some custom plugins to get simulated data. The goal here is to get a precision landing algorithm working and begin to iron it out. I have a RealSense D435i model attached to the drone, but am having trouble getting simulated sensor data from it out of Gazebo and back into ROS, which would run a process to detect a QR code, calculate where it is in relation to the drone, and then plot a course to land on the QR code. I have the QR code in the scene (which was much harder than I thought it would be), but I’m hung up on this point. This is one of those things that I didn’t really think about when starting off / planning the development, but I will definitely need to do similar things in the simulator in the future, so it’s something I am aiming to get comfortable with.
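For the "calculate where it is in relation to the drone" step, one common approach is to back-project the marker's pixel position through a pinhole camera model using the measured depth. The sketch below assumes the QR code centre has already been detected at pixel (u, v), that the depth at that pixel is known, and that the camera intrinsics (fx, fy, cx, cy) come from calibration. The numbers in the usage example are made up, and the proportional velocity command is a stand-in for a real landing controller, not the algorithm this project will end up using.

```python
def marker_offset(u, v, depth_m, fx, fy, cx, cy):
    """Back-project pixel (u, v) at a known depth into camera-frame metres.

    Returns (x, y, z): x is right and y is down in the image, z is the
    distance along the optical axis. How these axes map onto the drone's
    body frame depends on how the camera is mounted (an assumption here).
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return x, y, depth_m


def landing_velocity(x_m, y_m, gain=0.5, max_speed=1.0):
    """Proportional horizontal velocity command toward the marker.

    The command is clamped to max_speed (m/s) on each axis so a far-away
    marker cannot demand an aggressive manoeuvre close to the ground.
    """
    vx = max(-max_speed, min(max_speed, gain * x_m))
    vy = max(-max_speed, min(max_speed, gain * y_m))
    return vx, vy


if __name__ == "__main__":
    # Hypothetical intrinsics and detection for a 640x480 image.
    x, y, z = marker_offset(420, 240, 2.0,
                            fx=600.0, fy=600.0, cx=320.0, cy=240.0)
    print(landing_velocity(x, y))
```

Because both functions are pure math, they can be tested without Gazebo at all, which may help while the simulated sensor pipeline is still being sorted out.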

Next Milestone

The next test flight is scheduled for the first half of 2025. It will demonstrate offboard control (ROS) flight, precision landing, and lighting control as well as failsafe testing. I will also be testing the range of the live video stream from the landing camera.

Development Phase III

Thermal Camera

I am using a FLIR Boson+ camera as the thermal imager. Initial testing has been very fruitful and I’m confident this device will get the job done. I have yet to determine the gimbal system that this camera will be attached to.

Image classification

I am in the very early stages of determining the classification architecture that will be used for image classification. Because of the niche aspect of the application, I expect I will need to manually build a thermal image dataset to train on.

Edge Computing

The image classification model will run on an NVIDIA Jetson Orin Nano. This is a super powerful, yet compact, edge compute device that is designed with machine learning tasks in mind.

Notification

I have yet to determine the mechanism for notifications as this is one of the very last pieces that will be implemented.

Future Drone Projects

Swarms

There are a number of specific applications I want to explore using swarms, but at the core I want to build a high-level control architecture that lets the end user give generalized commands or tasks, with the specific control for each drone determined by lower-level processes that have been abstracted away. I’m sure a good amount of work has already been done here, but I feel there is still room for innovation in the space. With this, there are specific communication protocol designs I want to explore to optimize swarms. Specifically, I have been thinking about a “Drone Area Network (DAN)” for several years. A DAN would be a self-assembling, mutable network with a dynamic topology that supports both UDP- and TCP-style connections.

Heavy Lift / Cargo

This is kind of self-explanatory, but the ultimate “moonshot” here would be a drone designed to attach to 20 ft or 40 ft shipping containers, with a goal range of ~150 miles. Obviously this is something that will take a lot of money and mostly custom components, so this goal is very much back-burnered until I have a better grip on exactly how I would achieve it. I would likely need to raise a fund to tackle this one.

Magnetotellurics

You can see more on this in the Antenna Project page. Basically, I want to do resource exploration with magnetotellurics using drone swarms. Currently there is 1 company doing something similar (Expert Geophysics), but this is a wide open market that I think can be very valuable for various industries.